How Noise Isolation Improves Transcription Accuracy

Noise isolation is key to making audio recordings clearer, which directly improves transcription accuracy. By reducing background sounds like traffic, conversations, or equipment noise, speech becomes easier to process for transcription systems. This reduces errors, omissions, and misinterpretations, which is critical in fields like healthcare, law, and media.

Key Takeaways:

- Healthcare: Reduces transcription errors that could lead to medical mistakes, lowering patient-safety and legal risks.

- Legal Industry: Ensures precise transcripts for court proceedings and depositions, preventing case misinterpretations.

- Media: Improves workflows by providing accurate transcripts for content repurposing, SEO, and accessibility.

Research Insights:

- Noise isolation lowers Word Error Rate (WER), especially in noisy environments.

- AI-driven methods outperform older noise reduction techniques, as they preserve speech details while filtering noise.

- Some modern transcription models, like Deepgram's Nova-3, handle noisy audio without pre-filtering, avoiding over-filtering issues.

Challenges:

- Over-filtering can remove important speech elements.

- Advanced AI models may require more processing power and introduce latency.

- Noise isolation methods vary in effectiveness depending on the type of noise and audio environment.

Tools like OneStepTranscribe integrate noise isolation into transcription workflows to produce reliable results even with challenging audio conditions. OneStepTranscribe supports various file formats, encrypts uploaded files, and handles large files without requiring sign-ups.

Research Data: How Noise Isolation Affects Transcription Accuracy

Research highlights that noise isolation significantly improves transcription accuracy by minimizing interference from background sounds. This is particularly important in fields like healthcare and law, where precise transcriptions are essential. Studies have shown that noisy environments pose major challenges for AI transcription systems, leading to higher error rates when background noise isn't adequately managed.

The Speech Robust Bench (SRB) provides a comprehensive framework for evaluating transcription models under 114 different noise conditions and environmental disturbances. By introducing noise at four distinct severity levels, SRB helps researchers analyze how various noise scenarios impact transcription performance. These effects are measured using standardized error metrics.
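To make the idea of graded noise severity concrete, here is a minimal sketch that mixes noise into a clean signal at a chosen signal-to-noise ratio (SNR). It assumes severity can be expressed as an SNR in decibels; the `SEVERITY_SNR_DB` mapping and the `mix_at_snr` helper are hypothetical illustrations, not SRB's actual perturbation code or values.

```python
import numpy as np

# Hypothetical severity-to-SNR mapping, for illustration only.
# These are NOT the values SRB uses.
SEVERITY_SNR_DB = {1: 20.0, 2: 10.0, 3: 5.0, 4: 0.0}


def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add noise to speech, scaled so the mixture has the requested SNR in dB."""
    noise = np.resize(noise, speech.shape)  # repeat or trim noise to the speech length
    speech_power = np.mean(speech ** 2)
    noise_power = np.mean(noise ** 2)
    if noise_power == 0:
        raise ValueError("noise must not be silent")
    # SNR_dB = 10 * log10(speech_power / noise_power), solved for the noise power
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    return speech + noise * np.sqrt(target_noise_power / noise_power)


# Synthetic stand-ins for real audio: a 220 Hz tone and white noise at 16 kHz.
rng = np.random.default_rng(0)
t = np.arange(16000) / 16000
speech = 0.5 * np.sin(2 * np.pi * 220 * t)
noise = rng.normal(size=8000)

noisy_versions = {
    level: mix_at_snr(speech, noise, snr_db)
    for level, snr_db in SEVERITY_SNR_DB.items()
}
```

Transcribing each entry of `noisy_versions` with the same model and comparing error rates gives a rough picture of how quickly accuracy falls as noise gets louder.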

Word Error Rate (WER) Improvements

The Word Error Rate (WER) is a key metric for assessing transcription accuracy. It counts the word substitutions, deletions, and insertions needed to turn a transcript into the reference text, then divides that count by the number of words in the reference. Studies consistently show that applying noise isolation techniques reduces WER across diverse audio conditions.

Metrics such as WER, WER Degradation, and normalized error rates allow for standardized comparisons by factoring in speech quality degradation scores from quality measures like DNSMOS and PESQ. These metrics quantify how noise isolation minimizes transcription errors.
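As a rough illustration of how WER is computed, the sketch below uses a word-level edit distance. The `wer_degradation` helper assumes WER Degradation is the noisy-audio WER minus the clean-audio WER; both function names are illustrative and not taken from any particular library.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


def wer_degradation(wer_noisy: float, wer_clean: float) -> float:
    """Assumed definition: how much WER rises when noise is added."""
    return wer_noisy - wer_clean


# One substitution (ten -> two) and one deletion (daily) over 6 reference words: 2 / 6.
example_wer = word_error_rate(
    "the patient takes ten milligrams daily",
    "the patient takes two milligrams",
)
```

Note that WER can exceed 1.0 when the hypothesis contains many inserted words, so it is an error ratio rather than a true percentage.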

In SRB evaluations, Whisper large-v2 emerged as the most resilient model against non-adversarial noise, outperforming even newer models in noisy environments.

Performance Across Different Audio Quality Levels

Performance varies notably depending on audio quality. SRB evaluates transcription models on clean speech datasets such as LibriSpeech and TED-LIUM as well as noisy recordings from datasets such as Common Voice, CHiME-6, and AMI. This broad testing approach demonstrates how noise isolation fares across a wide range of real-world audio conditions.

For high-quality audio with minimal noise, noise isolation yields only modest gains. However, its impact becomes much more pronounced with low-quality recordings that include heavy background noise, overlapping conversations, or environmental sounds.

Interestingly, modern end-to-end speech recognition systems often perform better with unaltered audio rather than noise-reduced versions. This is because traditional noise reduction techniques can strip away subtle speech cues that overlap with background noise, as both often share similar frequency ranges.

"Noise reduction often harms speech-to-text accuracy by removing valuable audio cues that modern AI systems rely on to transcribe speech." - Deepgram

Deepgram's Nova-3 model exemplifies this trend, as it is specifically designed to handle noisy environments and overlapping speech without requiring pre-filtering. This approach has proven more effective than preprocessing audio with noise reduction techniques.

Additionally, research suggests that larger ASR models are generally more adept at handling complex acoustic scenarios, including background noise, compared to smaller models - even when the smaller models are trained with more extensive datasets. This indicates that model size plays a critical role in managing acoustic challenges.

Finally, studies show that AI-driven noise isolation methods outperform traditional signal processing techniques. These advanced systems leverage extensive datasets to distinguish between speech and background noise, preserving essential speech characteristics while filtering out irrelevant sounds. Unlike broad-spectrum noise suppression, which can degrade speech quality, AI approaches maintain the integrity of the audio while enhancing transcription accuracy.

Methods for Background Noise Isolation in Transcription

AI transcription systems use a range of techniques to separate speech from background noise. Each method has its strengths and weaknesses, which explains why some transcription services perform better in noisy or complex environments.

Signal Processing Methods

Traditional signal processing techniques are the backbone of noise isolation. For instance, spectral subtraction estimates the noise spectrum during silent moments, when no one is speaking, and subtracts that estimate from the spectrum of the whole recording. Similarly, adaptive filtering adjusts its filter in real time to handle changing noise patterns.
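A minimal sketch of spectral subtraction follows. It assumes the recording starts with a short noise-only stretch, and the frame length, number of noise frames, and spectral floor are illustrative defaults rather than tuned values. The `spectral_subtract` name is hypothetical.

```python
import numpy as np


def spectral_subtract(signal: np.ndarray, noise_frames: int = 10,
                      frame_len: int = 512, floor: float = 0.02) -> np.ndarray:
    """Subtract an average noise magnitude spectrum from every frame."""
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    hop = frame_len // 2
    # Periodic Hann window: copies overlapped by 50% sum to 1, so plain
    # overlap-add reconstructs the interior of the signal.
    window = np.hanning(frame_len + 1)[:-1]
    n_frames = 1 + (len(signal) - frame_len) // hop

    frames = np.stack([
        signal[i * hop: i * hop + frame_len] * window for i in range(n_frames)
    ])
    spectra = np.fft.rfft(frames, axis=1)
    magnitude, phase = np.abs(spectra), np.angle(spectra)

    # Assumption: the first `noise_frames` frames contain noise only.
    noise_mag = magnitude[:noise_frames].mean(axis=0)

    # Subtract the noise estimate, but keep a small floor so bins never go negative.
    cleaned_mag = np.maximum(magnitude - noise_mag, floor * magnitude)
    cleaned = np.fft.irfft(cleaned_mag * np.exp(1j * phase), n=frame_len, axis=1)

    # Overlap-add the frames; samples past the last full frame stay at zero.
    output = np.zeros(len(signal))
    for i in range(n_frames):
        output[i * hop: i * hop + frame_len] += cleaned[i]
    return output
```

Because the noise estimate is a single average spectrum, this approach only suits noise that stays roughly constant over the recording. The spectral floor is one common way to limit the "musical noise" artifacts that hard zeroing tends to produce.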

Another method, Wiener filtering, minimizes the mean squared error between the clean speech and the filtered output. Using statistical models of both speech and noise, it attenuates each frequency according to how much of its energy is expected to be speech.
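In its classical frequency-domain form, and assuming the noise is additive and uncorrelated with the speech, the Wiener filter applies a gain to each frequency. The symbols below are notation chosen for this illustration: $S_{ss}(f)$ and $S_{nn}(f)$ are the power spectral densities of the clean speech and the noise, and $\xi(f)$ is their ratio, the SNR at frequency $f$.

$$
H(f) = \frac{S_{ss}(f)}{S_{ss}(f) + S_{nn}(f)} = \frac{\xi(f)}{1 + \xi(f)}, \qquad \xi(f) = \frac{S_{ss}(f)}{S_{nn}(f)}
$$

For example, a frequency where speech power is nine times the noise power ($\xi = 9$, about 9.5 dB) gets a gain of $0.9$, while a frequency where the two are equal ($\xi = 1$, 0 dB) gets a gain of $0.5$. In practice both spectra must be estimated from the noisy recording, and errors in those estimates are one source of over-filtering.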

These methods are effective for reducing steady background noises like air conditioning hums or distant traffic. However, they often struggle with non-stationary noise - sounds that change over time, such as conversations, music, or machinery that starts and stops. While traditional methods provide a solid foundation, they are limited when it comes to handling more dynamic or complex noise environments.

AI-Based Noise Reduction Models

Modern AI techniques have significantly advanced noise isolation. Tools like RNNoise, which uses recurrent neural networks, and other deep learning models with convolutional or transformer architectures, leverage large datasets to learn how to separate speech from noise.

AI goes beyond basic noise removal through speech enhancement models, which not only filter out noise but also reconstruct parts of speech that may have been masked. This allows these systems to recover lost speech information, something traditional methods often fail to achieve.

AI-based methods also excel at dealing with overlapping frequency ranges. While traditional techniques might remove all sounds within certain frequencies (including parts of speech), AI models can selectively preserve speech while filtering out noise in the same range.

The most advanced systems use end-to-end neural networks, which combine noise isolation and transcription into a single process. These networks can directly transcribe speech from noisy audio without requiring separate noise-cleaning steps, improving both speed and accuracy.

Trade-Offs in Noise Isolation

Both traditional and AI-based approaches have their pros and cons, and choosing the right method often involves balancing trade-offs.

One major challenge is over-aggressive filtering, where noise reduction systems remove not only background sounds but also critical speech elements, leading to incomplete transcriptions.

Processing latency is another factor, especially for real-time transcription. Advanced AI models often require more computation, which can delay results. In contrast, traditional methods are faster but less effective in complex noise scenarios.

Hardware requirements also vary widely. Basic techniques like spectral subtraction can run on simple devices, while AI models often need powerful processors and significant memory to function efficiently.

Another issue is artifact introduction, where noise isolation systems unintentionally create distortions like metallic-sounding speech or echoes. These artifacts can confuse transcription systems more than the original noise itself.

Modern systems increasingly rely on noise-robust models, which are trained to handle noisy audio directly without aggressive preprocessing. This approach tends to preserve more speech details and avoids the distortions that traditional noise reduction might introduce.

Ultimately, the success of a noise isolation method depends on the type of noise and the acoustic environment. For steady noises like a fan, traditional filtering works well. However, for environments with multiple speakers or unpredictable noise, AI-based methods are far more effective.


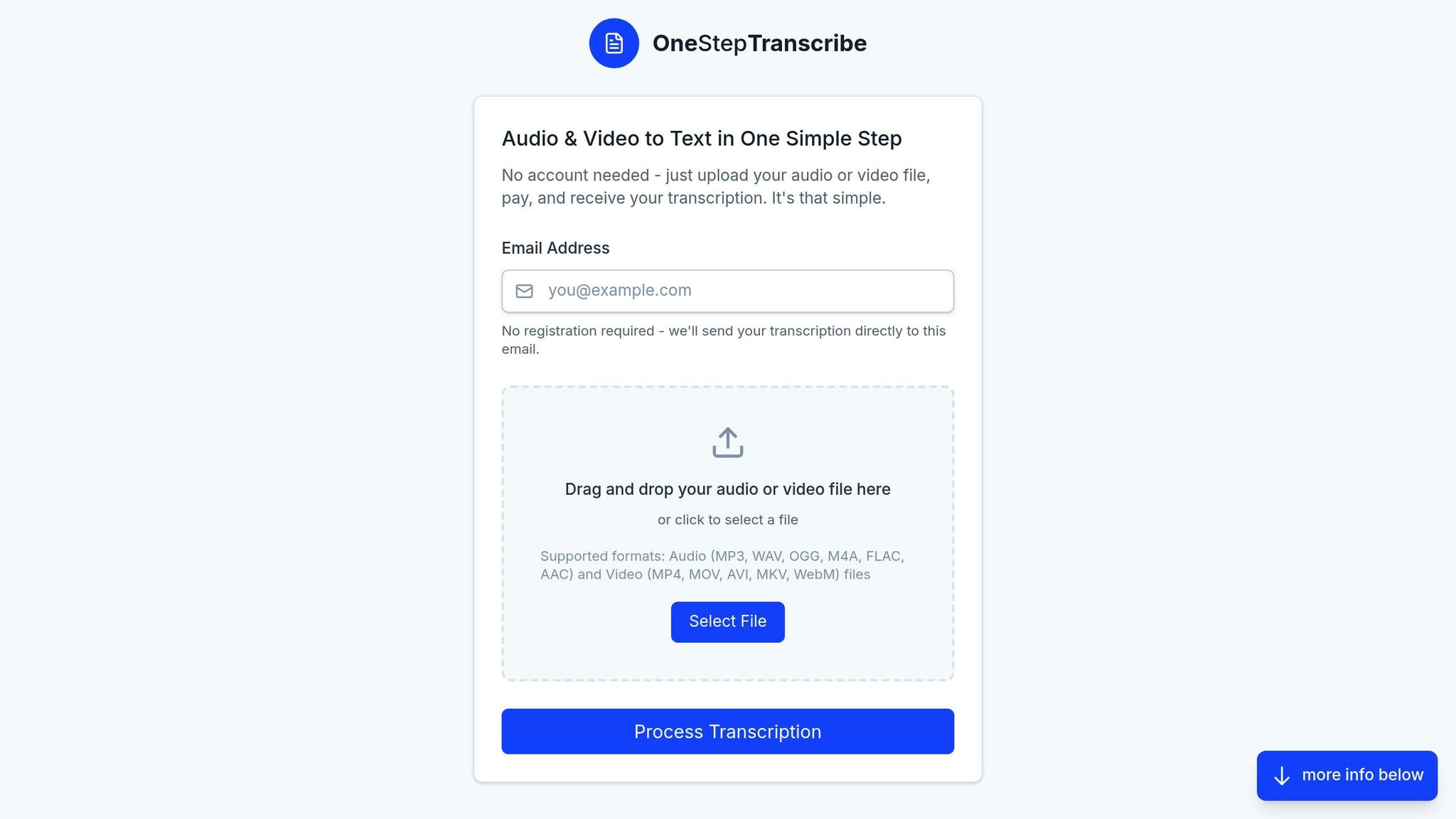

How OneStepTranscribe Uses Noise Isolation

OneStepTranscribe builds noise isolation directly into its transcription process, with the aim of improving accuracy on noisy recordings. The service also accepts files up to 5GB without requiring users to sign up, making it a practical solution for both casual users and professionals.

The system is built to handle a wide range of ambient sounds, ensuring spoken words are captured with clarity, even in noisy environments. This flexibility means that whether you're dealing with a bustling background or minor interruptions, the transcription remains reliable.

Supporting popular formats like MP3, WAV, MP4, and MOV, the platform is equipped to manage varying audio qualities. Features like speaker identification and automatic timestamps work hand-in-hand with noise isolation, ensuring clear differentiation between speakers and precise timing. Together, these tools maintain accuracy across diverse file types, reinforcing the service's reliability.

Security is also a top priority. Uploaded files are encrypted and automatically deleted after transcription, giving users peace of mind. Final transcripts are available in multiple formats, including PDF, Word, Markdown, and CSV, making it easy to integrate them into any workflow. Improved audio clarity not only reduces errors but also ensures the original content is preserved.

Common Use Cases

Noise isolation becomes crucial in situations where background sounds threaten the clarity of recorded speech. For instance, remote interviews conducted over video calls often pick up keyboard clicks or household noises. Similarly, webinars and conference calls, with their mix of multiple speakers and inconsistent audio quality, benefit greatly from enhanced clarity.

Field recordings, legal depositions, and podcast post-production also rely heavily on noise isolation. These scenarios often involve recordings made in unpredictable environments, where separating speech from background noise is essential for accuracy.

These examples show how noise isolation improves transcription quality across a range of recording conditions.

Why Noise Isolation Matters for Transcription

When it comes to transcription, noise isolation plays a crucial role in ensuring accuracy. Clear audio directly translates to more precise transcriptions, which is especially important for professionals who rely on these documents in their day-to-day work.

In fields like law, medicine, or business, where every word matters, noise isolation saves valuable time. Accurate transcriptions mean less back-and-forth for corrections, making workflows smoother and more efficient in high-pressure environments.

There’s also a financial upside to consider. Poor audio quality often results in additional costs for editing or redoing transcriptions. Services like OneStepTranscribe, which use advanced noise isolation techniques, help users avoid these extra expenses. Whether you’re handling a single critical file or managing regular transcription tasks, better audio clarity means greater value for your money.

On top of cost savings, security and ease of use are enhanced. OneStepTranscribe not only reduces background noise but also incorporates encryption and automatic file deletion to keep data secure. Plus, the platform can handle files up to 5GB without requiring registration, offering both professional-grade quality and convenience.

The benefits of noise isolation extend to a wide range of scenarios. Whether it’s cleaning up a podcast recording or ensuring accurate transcripts of remote interviews, modern noise isolation technology adapts to different audio conditions, maintaining consistent quality throughout.

FAQs

How does noise isolation help improve transcription accuracy?

Noise isolation plays a key role in improving transcription accuracy by cutting down background noise that can disrupt speech recognition. By homing in on the speaker's voice and filtering out distractions, transcription systems can interpret spoken words more effectively, resulting in fewer mistakes and a reduced Word Error Rate (WER).

This becomes especially important in noisy environments, where clearer audio input enables AI-powered transcription tools to produce more accurate and dependable results.

How do traditional noise reduction methods compare to AI-based models for improving transcription accuracy?

Traditional methods for reducing noise, such as filtering and spectral subtraction, can improve audio clarity by cutting down on background interference. The downside? These approaches sometimes strip away essential speech details, which can hurt transcription accuracy.

AI-driven noise reduction models take a smarter approach. Using machine learning, they excel at distinguishing between speech and noise, often providing better results in noisy or tricky environments. That said, these models aren't perfect right out of the box. They need fine-tuning to ensure they don't overdo it and accidentally erase key parts of the speech. When adjusted correctly, AI models tend to handle complex noise scenarios far better than traditional techniques.

Why do larger ASR models handle background noise better than smaller ones, even with extensive training data?

Larger ASR models tend to perform better in noisy settings because their additional parameters give them more capacity to learn speech features that stay stable under interference from noise. Research suggests this holds even when smaller models are trained on more extensive datasets, although it is an observed trend rather than a guarantee.

That extra capacity also helps larger models adjust to a wide range of audio conditions, which makes them more reliable at separating speech from noise in environments with challenging acoustics.